![]()

Global Optimization

Short introduction

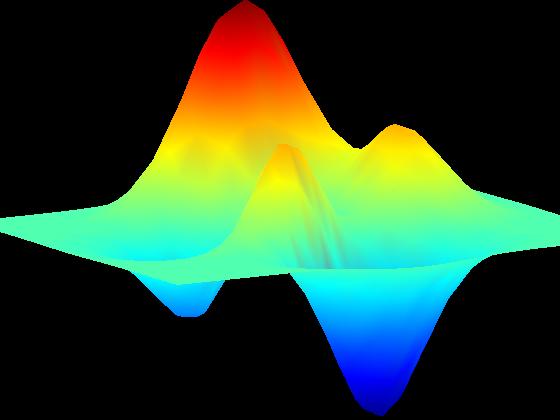

Global Optimization is the art of finding low-lying minima in high dimensional and rough functions. There are various applications of these methods to different fields of both science and industry. These include the design of electronic circuits, the optimization of flight plans or trade-routes, the well-known traveling salesman problem, Lennard-Jones clusters, spin-glasses or protein folding. In practical applications it is sometimes sufficient to find low-lying minima while some scientific question need the deepest minimum of the optimized function. One well-known subgroup of Global Optimizers are the Stochastic Optimization methods. These work by probing the functional surface with random moves. One typical example of such minimization is Simulated Annealing (SA). SA is an iterative method which works with a virtual temperature T. Each point of the functions domain is seen as a state of the system and the function f(x) is interpreted as an energy E(x) which is to be minimized (therefore exploring a potential energy surface (PES)). Starting from a random initial state each step of a SA-minimization is testing the energy of a nearby random state x'. This state x' is accepted as starting point for the next step of the SA algorithm if min(exp(-delta E/(kB T)),1) is smaller than random(1) (delta E=E(x)-E(x'), kB is the Boltzmann's constant, T is the virtual Temperature, random(1) is a random number between 0 and 1). Otherwise the state stays unchanged and x' is simply discarded. Afterwards the temperature is slightly lowered (typically in a geometric fashion) and a new random change of the state is tried till a sufficiently low temperature is reached. One can easily see that downhill moves are always accepted while energetically unfavored moves are more likely to be accepted when the temperature is high. Theoretically SA is guaranteed to find the lowest energy as long as each change is done adiabatically and the ergodicity of the changes of states in insured (ergodicity means basically that each point of the space for which E(x) is defined is reachable in a limited number of steps from each other point). However in actual simulations ensuring this adiabatic behavior is far too slow to be of any practical use. Therefore the temperature is lowered very quickly which doesn't meet any adiabatic requirement anymore and can result in a freezing of the simulation which means it got stuck in a local minimum. This problem of freezing is common to all stochastic minimization methods. One can never be sure having found the global optimum. Therefore one should never trust a single simulation since it is likely that lower lying minima exist.

My contribution to this field (also see publications)

My work concentrated on developing and testing different stochastic optimization methods specifically effective for application to structure prediction of a free-energy function for protein folding. Using vanilla SA we were unable to find very deep minima on the free-energy surface. Therefore we needed to develop and test more efficient methods for this task. One very promising global optimizer is STUN (stochastic tunneling), which we slightly modified for its application in protein folding. It works by a dynamic transformation of the optimized function thus enabling an easy crossing of high barriers during the optimization process. This enabled us to find an estimation of the global minimum which agreed very well with experimental data for the investigated protein. Another successfull method for usage on computer clusters is parallel tempering (PT). It uses a multitude of concurrent parallel runs at different temperatures. Crucial to the success of PT is the exchange between temperature levels which allows a large-scale exploration of the functional space. We introduced a dynamic adaptation of the temperatures during the simulations and a replication of good states. These two changes to classical PT strongly improved its performance. To allow the usage of inhomogeneous computer networks we developed a server-client model as a evolutionary model where a central server keeps a population of structures which evolve (are optimized) on remote computers. The challenge here is that all computers may or not finish their optimization runs and both the connection speed and computational power of the computers are inhomogeneous. This allows the usage of computers all over the world via the Internet, a method which is also successfully applied by, for example, folding@home. We refined our evolutionary model and successfully predicted the structure of a 60 amino-acid protein on an all-atom level using no information from protein databases. It shows that the population strongly enhances its native content during the simulations. All these methods, among other which we used and tested (for example basin hopping) should a strong increase in efficiency compared to applying them in a not-problem specific adaptation. We gained in general more than one order of magnitude in the efficiency for the optimization algorithm and were able to find better estimations of the global minimum.